Namespaces

| detail | |

Classes

| class | future |

| Provides a way to access the result of an asynchronous operation. | |

| class | IoThreadPool |

| Represents a thread pool that shares a single io context across multiple threads. | |

| class | MultiServicePool |

| class | promise |

| Stores the result of an asynchronous operation. | |

| class | Scheduler |

| A scheduler. | |

| class | StrandedTimedCallback |

| class | StrandOwnerLifetimeExtender |

| Wraps a strand and automatically augments handlers to extend the lifetime of an owning object. | |

| struct | Task |

| A task that can be dispatched to the scheduler. | |

Typedefs

| using | TaskCallback = supplier< thread::future< TaskResult > > |

| Task callback that is invoked by the scheduler. | |

| using | DelayGenerator = supplier< utils::TimeSpan > |

| Supplier that generates delays. | |

Enumerations

| enum | TaskResult { TaskResult::Continue, TaskResult::Break } |

| Result of a task. | |

Functions

| template<typename T > | |

| future< T > | make_ready_future (T &&value) |

| Produces a future that is ready immediately and holds the given value. | |

| template<typename T , typename E > | |

| future< T > | make_exceptional_future (E ex) |

| Produces a future that is ready immediately and holds the given exception (ex). | |

| template<typename T > | |

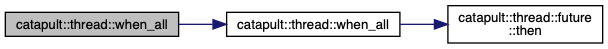

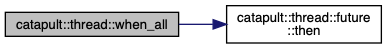

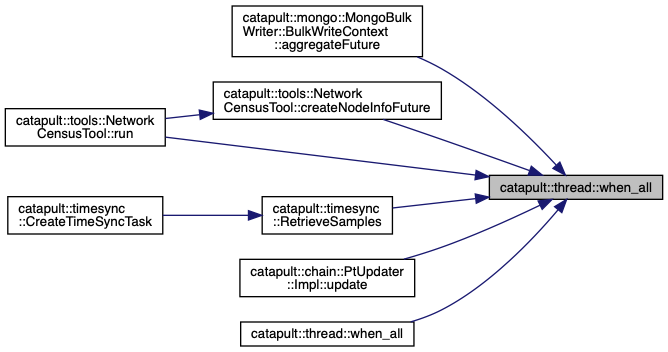

| future< std::vector< future< T > > > | when_all (std::vector< future< T >> &&allFutures) |

| Returns a future that is signaled when all futures in allFutures complete. | |

| template<typename T > | |

| future< std::vector< future< T > > > | when_all (future< T > &&future1, future< T > &&future2) |

| Returns a future that is signaled when both future1 and future2 complete. | |

| template<typename TSeed , typename TCreateNextFuture , typename TResultFuture = std::invoke_result_t<TCreateNextFuture, future<TSeed>&&>, typename TResultType = decltype(TResultFuture().get())> | |

| auto | compose (future< TSeed > &&startFuture, TCreateNextFuture createNextFuture) |

| template<typename T > | |

| std::vector< T > | get_all_ignore_exceptional (std::vector< future< T >> &&futures) |

| template<typename T > | |

| std::vector< T > | get_all (std::vector< future< T >> &&futures) |

| std::unique_ptr< IoThreadPool > | CreateIoThreadPool (size_t numWorkerThreads, const char *name) |

| template<typename TItems , typename TWorkCallback > | |

| thread::future< bool > | ParallelForPartition (boost::asio::io_context &ioContext, TItems &items, size_t numPartitions, TWorkCallback callback) |

| template<typename TItems , typename TWorkCallback > | |

| thread::future< bool > | ParallelFor (boost::asio::io_context &ioContext, TItems &items, size_t numPartitions, TWorkCallback callback) |

| std::shared_ptr< Scheduler > | CreateScheduler (const std::shared_ptr< IoThreadPool > &pPool) |

| Creates a scheduler around the specified thread pool (pPool). | |

| DelayGenerator | CreateUniformDelayGenerator (const utils::TimeSpan &delay) |

| Creates a uniform delay generator that always returns delay. | |

| DelayGenerator | CreateIncreasingDelayGenerator (const utils::TimeSpan &minDelay, uint32_t numPhaseOneRounds, const utils::TimeSpan &maxDelay, uint32_t numTransitionRounds) |

| Task | CreateNamedTask (const std::string &name, const TaskCallback &callback) |

| Creates an unscheduled task with name and callback. | |

| size_t | GetMaxThreadNameLength () |

| Gets the maximum supported thread name length (excluding NUL-terminator). | |

| void | SetThreadName (const std::string &name) |

| std::string | GetThreadName () |

| Gets a thread name in a platform-dependent way. | |

| template<typename TCallback , typename... TCallbackArgs> | |

| auto | MakeTimedCallback (boost::asio::io_context &ioContext, TCallback callback, TCallbackArgs &&... timeoutArgs) |

Typedef Documentation

◆ DelayGenerator

| using catapult::thread::DelayGenerator = supplier<utils::TimeSpan> |

Supplier that generates delays.

◆ TaskCallback

| using catapult::thread::TaskCallback = supplier<thread::future<TaskResult> > |

Task callback that is invoked by the scheduler.

Enumeration Type Documentation

◆ TaskResult

| enum catapult::thread::TaskResult | strong |

Result of a task.

Function Documentation

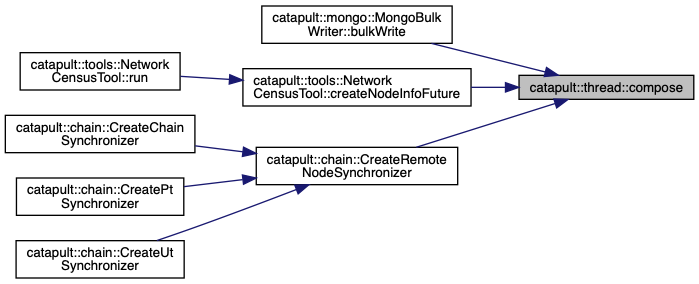

◆ compose()

| auto catapult::thread::compose | ( | future< TSeed > && | startFuture, |

| TCreateNextFuture | createNextFuture | ||

| ) |

Composes a future (startFuture) with a second future returned by a function (createNextFuture) that is passed the original future upon its completion.

- Note

- The future returned by this function will be the same type as the one returned by createNextFuture.
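
To make the type relationship in the note concrete, here is a minimal sketch built on `std::future` rather than `thread::future`. `ComposeSketch` is a hypothetical name, and unlike the real function (which presumably attaches a continuation) this stand-in simply blocks until startFuture completes. It only illustrates that the returned future has the type produced by createNextFuture.

```cpp
#include <cassert>
#include <future>
#include <string>
#include <type_traits>
#include <utility>

// Hypothetical, blocking stand-in for compose(): waits for startFuture and then
// hands the completed future to createNextFuture.
template<typename TSeed, typename TCreateNextFuture>
auto ComposeSketch(std::future<TSeed>&& startFuture, TCreateNextFuture createNextFuture) {
    startFuture.wait();
    return createNextFuture(std::move(startFuture));
}

// Example: an int future composed with a function that yields a string future.
inline void DemoCompose() {
    std::promise<int> seed;
    seed.set_value(21);

    auto result = ComposeSketch(seed.get_future(), [](std::future<int>&& completed) {
        std::promise<std::string> next;
        next.set_value(std::to_string(2 * completed.get()));
        return next.get_future();
    });

    // the result type matches the type returned by the second stage
    static_assert(std::is_same<decltype(result), std::future<std::string>>::value, "unexpected type");
    assert("42" == result.get());
}
```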

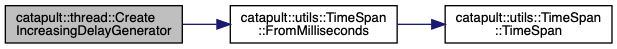

◆ CreateIncreasingDelayGenerator()

| DelayGenerator catapult::thread::CreateIncreasingDelayGenerator | ( | const utils::TimeSpan & | minDelay, |

| uint32_t | numPhaseOneRounds, | ||

| const utils::TimeSpan & | maxDelay, | ||

| uint32_t | numTransitionRounds | ||

| ) |

Creates a three-phase increasing delay generator:

- phase 1: minDelay for numPhaseOneRounds rounds

- phase 2: delay is linearly increased from minDelay to maxDelay over numTransitionRounds rounds

- phase 3: maxDelay for all other rounds
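
The three phases can be sketched as follows. This is an illustration only: `Millis`, `DelayGeneratorSketch` and `MakeIncreasingDelayGeneratorSketch` are hypothetical names, `std::chrono::milliseconds` stands in for `utils::TimeSpan`, and the exact interpolation used in phase 2 (equal steps that reach maxDelay on the last transition round) is an assumption rather than the library's documented behavior.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <functional>

using Millis = std::chrono::milliseconds;

// Stand-in for DelayGenerator; the real type is supplier<utils::TimeSpan>.
using DelayGeneratorSketch = std::function<Millis ()>;

// Hypothetical helper that mimics the three documented phases.
inline DelayGeneratorSketch MakeIncreasingDelayGeneratorSketch(
        Millis minDelay,
        std::uint32_t numPhaseOneRounds,
        Millis maxDelay,
        std::uint32_t numTransitionRounds) {
    std::uint64_t round = 0;
    return [=]() mutable -> Millis {
        const std::uint64_t current = round++;
        if (current < numPhaseOneRounds)
            return minDelay; // phase 1: constant minimum delay

        const std::uint64_t step = current - numPhaseOneRounds + 1;
        if (step > numTransitionRounds)
            return maxDelay; // phase 3: constant maximum delay

        // phase 2: linear increase (assumed to reach maxDelay on the last transition round)
        const std::int64_t span = (maxDelay - minDelay).count();
        return Millis(minDelay.count() + span * static_cast<std::int64_t>(step) / numTransitionRounds);
    };
}

// Example: two rounds at 100ms, four transition rounds, then 500ms.
inline void DemoIncreasingDelays() {
    auto generator = MakeIncreasingDelayGeneratorSketch(Millis(100), 2, Millis(500), 4);
    assert(Millis(100) == generator());
    assert(Millis(100) == generator());
    assert(Millis(200) == generator());
}
```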

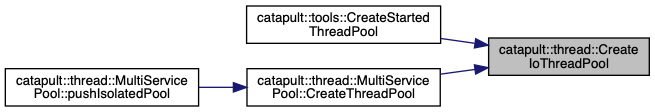

◆ CreateIoThreadPool()

| std::unique_ptr< IoThreadPool > catapult::thread::CreateIoThreadPool | ( | size_t | numWorkerThreads, |

| const char * | name = nullptr | ||

| ) |

Creates an io thread pool with the specified number of threads (numWorkerThreads) and an optional friendly name (name) that is used in logging.

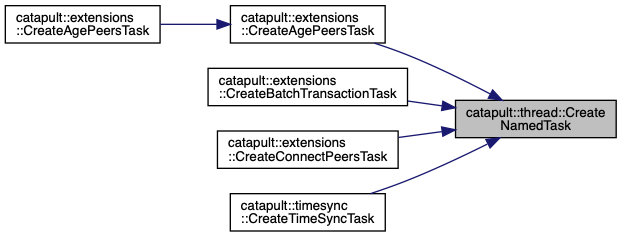

◆ CreateNamedTask()

| Task catapult::thread::CreateNamedTask | ( | const std::string & | name, |

| const TaskCallback & | callback | ||

| ) |

Creates an unscheduled task with name and callback.

◆ CreateScheduler()

| std::shared_ptr< Scheduler > catapult::thread::CreateScheduler | ( | const std::shared_ptr< IoThreadPool > & | pPool | ) |

Creates a scheduler around the specified thread pool (pPool).

◆ CreateUniformDelayGenerator()

| DelayGenerator catapult::thread::CreateUniformDelayGenerator | ( | const utils::TimeSpan & | delay | ) |

Creates a uniform delay generator that always returns delay.

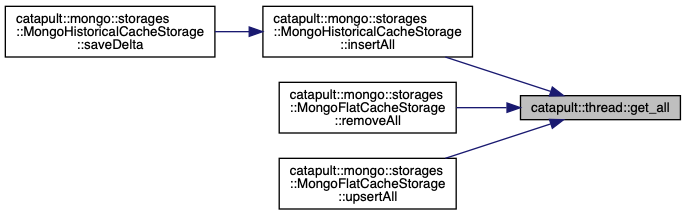

◆ get_all()

| std::vector<T> catapult::thread::get_all | ( | std::vector< future< T >> && | futures | ) |

Evaluates all futures and returns the results of all.

- Note

- This function will throw if at least one future has an exceptional result.

◆ get_all_ignore_exceptional()

| std::vector<T> catapult::thread::get_all_ignore_exceptional | ( | std::vector< future< T >> && | futures | ) |

Evaluates all futures and returns the results of all non-exceptional ones.

- Note

- Exceptional results are ignored and filtered out.
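
The difference between get_all and get_all_ignore_exceptional can be illustrated with `std::future`. The functions below are hypothetical stand-ins (`GetAllSketch`, `GetAllIgnoreExceptionalSketch`), not the library implementations. They assume that results are returned in input order, which the documentation above does not state.

```cpp
#include <cassert>
#include <exception>
#include <future>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical stand-in for get_all(): the first exceptional future rethrows.
template<typename T>
std::vector<T> GetAllSketch(std::vector<std::future<T>>&& futures) {
    std::vector<T> results;
    for (auto& future : futures)
        results.push_back(future.get()); // get() rethrows a stored exception
    return results;
}

// Hypothetical stand-in for get_all_ignore_exceptional(): failures are skipped.
template<typename T>
std::vector<T> GetAllIgnoreExceptionalSketch(std::vector<std::future<T>>&& futures) {
    std::vector<T> results;
    for (auto& future : futures) {
        try {
            results.push_back(future.get());
        } catch (...) {
            // exceptional result: ignore and continue with the next future
        }
    }
    return results;
}

// Builds three completed futures: value 1, an exception, value 3.
inline std::vector<std::future<int>> MakeMixedFutures() {
    std::promise<int> first, second, third;
    first.set_value(1);
    second.set_exception(std::make_exception_ptr(std::runtime_error("failed")));
    third.set_value(3);

    std::vector<std::future<int>> futures;
    futures.push_back(first.get_future());
    futures.push_back(second.get_future());
    futures.push_back(third.get_future());
    return futures;
}

inline void DemoGetAll() {
    auto values = GetAllIgnoreExceptionalSketch(MakeMixedFutures());
    assert(2u == values.size()); // the exceptional result was filtered out
}
```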

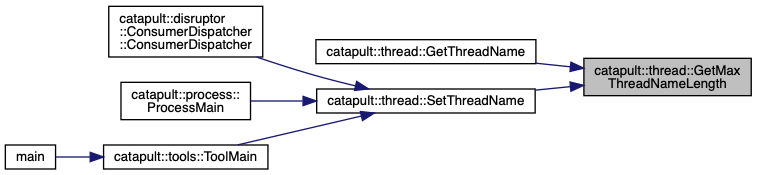

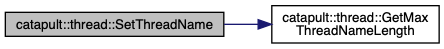

◆ GetMaxThreadNameLength()

| size_t catapult::thread::GetMaxThreadNameLength | ( | ) |

Gets the maximum supported thread name length (excluding NUL-terminator).

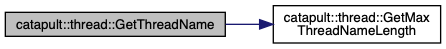

◆ GetThreadName()

| std::string catapult::thread::GetThreadName | ( | ) |

Gets a thread name in a platform-dependent way.

◆ make_exceptional_future()

| future<T> catapult::thread::make_exceptional_future | ( | E | ex | ) |

Produces a future that is ready immediately and holds the given exception (ex).

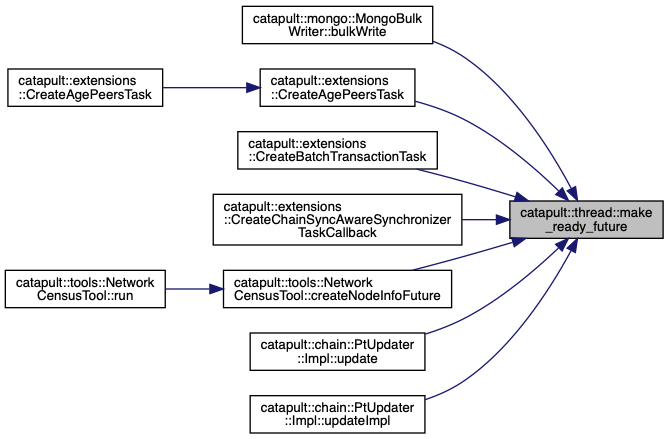

◆ make_ready_future()

| future<T> catapult::thread::make_ready_future | ( | T && | value | ) |

Produces a future that is ready immediately and holds the given value.
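
For readers more familiar with the standard library, the behavior of make_ready_future and make_exceptional_future can be approximated with `std::promise`. `MakeReadyFutureSketch` and `MakeExceptionalFutureSketch` are hypothetical names; this is a sketch of the idea, not the catapult implementation.

```cpp
#include <cassert>
#include <chrono>
#include <exception>
#include <future>
#include <stdexcept>
#include <utility>

// Hypothetical analogue of make_ready_future(): the value is set before the future is returned.
template<typename T>
std::future<T> MakeReadyFutureSketch(T value) {
    std::promise<T> promise;
    promise.set_value(std::move(value));
    return promise.get_future();
}

// Hypothetical analogue of make_exceptional_future(): the exception is stored up front.
template<typename T, typename E>
std::future<T> MakeExceptionalFutureSketch(E ex) {
    std::promise<T> promise;
    promise.set_exception(std::make_exception_ptr(std::move(ex)));
    return promise.get_future();
}

inline void DemoReadyFutures() {
    auto ready = MakeReadyFutureSketch<int>(7);

    // no waiting is needed because the result is already available
    assert(std::future_status::ready == ready.wait_for(std::chrono::seconds(0)));
    assert(7 == ready.get());
}
```

Calling `get()` on the future returned by the exceptional variant rethrows the stored exception.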

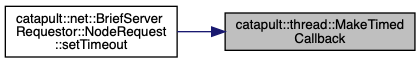

◆ MakeTimedCallback()

| auto catapult::thread::MakeTimedCallback | ( | boost::asio::io_context & | ioContext, |

| TCallback | callback, | ||

| TCallbackArgs &&... | timeoutArgs | ||

| ) |

Wraps callback with a timed callback using ioContext. On a timeout, the callback is invoked with timeoutArgs.

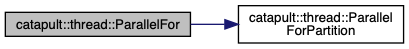

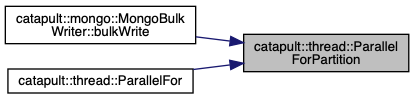

◆ ParallelFor()

| thread::future<bool> catapult::thread::ParallelFor | ( | boost::asio::io_context & | ioContext, |

| TItems & | items, | ||

| size_t | numPartitions, | ||

| TWorkCallback | callback | ||

| ) |

Uses ioContext to process items in numPartitions batches and calls callback for each item. A future is returned that is resolved when all items have been processed.

◆ ParallelForPartition()

| thread::future<bool> catapult::thread::ParallelForPartition | ( | boost::asio::io_context & | ioContext, |

| TItems & | items, | ||

| size_t | numPartitions, | ||

| TWorkCallback | callback | ||

| ) |

Uses ioContext to process items in numPartitions batches and calls callback for each partition. A future is returned that is resolved when all items have been processed.
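
The distinction between the per-item and per-partition variants can be sketched without Boost. The code below is a simplified, blocking illustration that uses `std::thread` instead of an io_context and returns `void` instead of `thread::future<bool>`. The function names, the callback signatures (an item reference, or a pair of iterators) and the partition sizing are all assumptions made for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <thread>
#include <vector>

// Hypothetical per-partition variant: callback receives the [begin, end) range of one partition.
template<typename TItems, typename TWorkCallback>
void ParallelForPartitionSketch(TItems& items, std::size_t numPartitions, TWorkCallback callback) {
    const std::size_t numItems = items.size();
    if (0 == numPartitions || 0 == numItems)
        return;

    // round up so that at most numPartitions partitions are created
    const std::size_t partitionSize = (numItems + numPartitions - 1) / numPartitions;

    std::vector<std::thread> workers;
    for (std::size_t start = 0; start < numItems; start += partitionSize) {
        const auto first = static_cast<std::ptrdiff_t>(start);
        const auto last = static_cast<std::ptrdiff_t>(std::min(start + partitionSize, numItems));
        workers.emplace_back([&items, first, last, callback]() mutable {
            callback(std::next(items.begin(), first), std::next(items.begin(), last));
        });
    }

    // block until every partition has been processed
    for (auto& worker : workers)
        worker.join();
}

// Hypothetical per-item variant, layered on top of the per-partition one.
template<typename TItems, typename TWorkCallback>
void ParallelForSketch(TItems& items, std::size_t numPartitions, TWorkCallback callback) {
    ParallelForPartitionSketch(items, numPartitions, [callback](auto begin, auto end) mutable {
        for (auto iter = begin; iter != end; ++iter)
            callback(*iter);
    });
}

inline void DemoParallelFor() {
    std::vector<int> items{ 1, 2, 3, 4 };
    ParallelForSketch(items, 2, [](int& item) { item *= 10; });
    assert(40 == items[3]);
}
```

Each worker touches a disjoint range of items, so the callbacks in this sketch need no extra synchronization as long as they only modify the items they are given.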

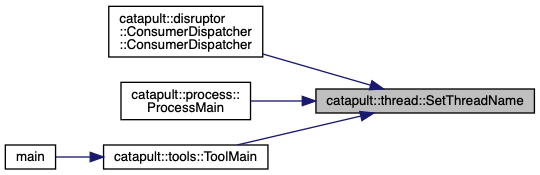

◆ SetThreadName()

| void catapult::thread::SetThreadName | ( | const std::string & | name | ) |

Sets a thread name in a platform-dependent way.

- Note

- Depending on platform capabilities, the name might be truncated.

◆ when_all() [1/2]

| future<std::vector<future<T> > > catapult::thread::when_all | ( | future< T > && | future1, |

| future< T > && | future2 | ||

| ) |

Returns a future that is signaled when both future1 and future2 complete.
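
As a rough illustration of the shape of the result, the sketch below approximates when_all with `std::future` and a deferred `std::async` task. `WhenAllSketch` is a hypothetical name. Unlike the real function, this stand-in only waits for the inputs when the outer future is queried. It assumes that the completed input futures are handed back in their original order.

```cpp
#include <cassert>
#include <future>
#include <utility>
#include <vector>

// Hypothetical stand-in for when_all(vector): the outer future yields the completed inner futures.
template<typename T>
std::future<std::vector<std::future<T>>> WhenAllSketch(std::vector<std::future<T>>&& allFutures) {
    return std::async(std::launch::deferred, [futures = std::move(allFutures)]() mutable {
        for (auto& future : futures)
            future.wait(); // completes for values and exceptions alike
        return std::move(futures);
    });
}

// Hypothetical stand-in for the two-future overload.
template<typename T>
std::future<std::vector<std::future<T>>> WhenAllSketch(std::future<T>&& future1, std::future<T>&& future2) {
    std::vector<std::future<T>> futures;
    futures.push_back(std::move(future1));
    futures.push_back(std::move(future2));
    return WhenAllSketch(std::move(futures));
}

inline void DemoWhenAll() {
    std::promise<int> first, second;
    first.set_value(1);
    second.set_value(2);

    auto completed = WhenAllSketch(first.get_future(), second.get_future()).get();
    assert(2u == completed.size());
    assert(1 == completed[0].get());
    assert(2 == completed[1].get());
}
```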